The United States Navy has long used wargames as a training method, and recent developments in AI have made naval wargaming more advanced than ever. As technological advancements continue to shape the conditions of modern warfare and strategic decision-making, the Navy is seeking innovative solutions to enhance its wargaming capabilities. Enter the world of LLM AI, a cutting-edge technology that is transforming the way we approach wargaming scenarios.

At Sentient Digital, Inc., we understand the paramount importance of leveraging advanced technology to address the complex challenges faced by modern warfighters, and the need for realistic training to ensure readiness. For this reason we have developed Fleet Emergence, which is revolutionizing wargaming for the Navy.

Large Language Model (LLM) AI represents a monumental leap forward in the field of artificial intelligence. Trained on extensive knowledge and data, LLMs can comprehend and process vast amounts of information in real time, allowing them to simulate and predict multifaceted scenarios with unprecedented accuracy. In the context of wargaming, this means we now have the ability to simulate ever more complex military operations, strategic decision-making, and geopolitical scenarios with remarkable fidelity.

Our Fleet Emergence platform is a prime example of LLM AI in modern wargaming, including its role in scenario development, decision support, and strategic planning. In this article, our Senior Artificial Intelligence Research Scientist, Gene Locklear, will discuss how Fleet Emergence can provide invaluable training simulations, ultimately enhancing the decision-making process and enabling the Navy to anticipate and respond effectively to rapidly evolving threats and challenges.

This deep dive into the cutting-edge details of our innovative AI-based naval wargames platform will give you a behind-the-scenes look at one of the many exciting ways Sentient Digital is supporting the warfighter. Explore how this groundbreaking technology is set to revolutionize the strategic landscape for the modern Navy. Discover how our advanced LLM AI-powered naval wargaming solution is reshaping the approach to training and tactical simulations in an ever more intricate and challenging global environment.

Modern Naval Wargaming as a Fleet Training Tool

Modern naval wargaming emulates naval warfare scenarios using advanced simulation technologies. It allows for honing of tactical skills, testing new technologies, and evaluating strategic decisions in a controlled, non-lethal, cost-efficient environment. Naval wargame simulations encompass a software representation of a wide range of platforms, from ships and submarines to aircraft and coastal defenses. These simulations allow naval commanders to strategize and develop operational plans to achieve maritime objectives, considering factors like the enemy’s fleet composition and the Operational Environment (OE). Highly realistic models replicate ship performance, sensor platforms and ship weaponry using advanced computer algorithms that can calculate ship sensor capability, weapons employment and engagement outcomes.

To further advance the sophistication of naval wargame simulations, Sentient Digital has introduced Fleet Emergence, which represents a paradigm shift in naval wargaming simulation by leveraging the power of Large Language Models (LLMs) layered underneath an Artificial Cognitive Intelligence (ACI) architecture. With its physics-based algorithms and highly developed graphics, Sentient Digital’s platform is a huge step in reshaping naval combat simulations at both the tactical and operational level.

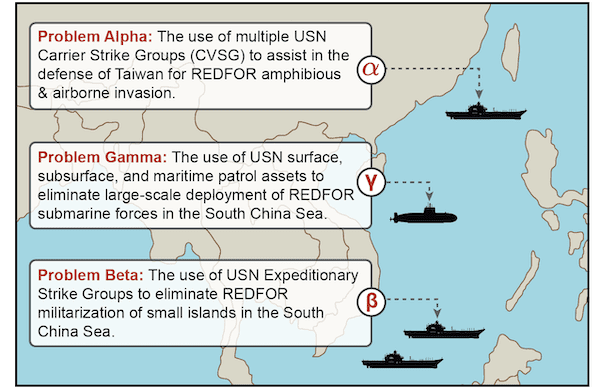

Fleet Emergence’s development was an outgrowth of our research into scenario analysis of three U.S. naval fleet problems involving large-scale land-based air and naval surface forces in the defense of Taiwan and Freedom of Navigation in the South China Sea. As our research is designed to consider large-scale air and naval operations, we formulated three hypothetical Fleet Problems that form the core of the Fleet Emergence simulation, shown in the accompanying graphic.

How does Fleet Emergence Work?

By integrating an LLM with an ACI architecture, Fleet Emergence builds a highly detailed and configurable naval warfare simulation that enhances not only the realism and ease of interaction of naval simulation but also its versatility as a training tool. This is accomplished, in large part, by having a text-based interface that understands military orders and planning, along with an “opponent” AI that is designed to replicate (without the need for configuration) the expected naval threat in a range of scenarios.

Fleet Emergence does this through a system consisting of four major design concepts:

- Natural Language Interface

The LLM layer serves as the natural language interface for the simulation because it can both understand and generate human language. This allows naval commanders to interact with the simulation using plain language commands and queries.

- Dynamic Scenario Generation

The LLM can create highly detailed scenarios and take in the scenario designer’s input for the simulation. It can also be pre-trained on historical naval data to provide context and insights during the simulation.

- Real-time Communication & Reports

The LLM can generate and receive real-time reports and communications as well as introduce variability in its reports to mimic the uncertainty and diverse sources of information present in real-world intelligence.

- Adaptive Opponent Behavior

Fleet Emergence encompasses cognitive capabilities (ACI) that can leverage the LLM’s natural language processing. This enables the AI to learn from its interactions with the human player, remembering past tactics, strategies, and outcomes, and allowing it to adjust its approach based on previous experiences with that naval commander.
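To make the idea of report variability concrete, here is a minimal sketch of how a single ground-truth contact could be turned into differing reports depending on the source. It is an illustration only: the `ContactReport` record, the `degrade_report` helper, the `reliability` parameter, and the noise magnitudes are all assumptions made for this example and are not taken from Fleet Emergence.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ContactReport:
    """Hypothetical contact report; the fields are illustrative only."""
    source: str
    bearing_deg: float   # bearing to the contact, degrees true
    range_nm: float      # range to the contact, nautical miles
    classification: str


def degrade_report(truth: ContactReport, source: str, reliability: float,
                   rng: random.Random) -> ContactReport:
    """Return a noisy copy of `truth` as a given source might report it.

    `reliability` is an assumed value in (0, 1]. Lower values produce larger
    bearing and range errors and a higher chance of an UNKNOWN classification.
    """
    if not 0.0 < reliability <= 1.0:
        raise ValueError("reliability must be in (0, 1]")
    spread = 1.0 - reliability
    # Bearing error in degrees, wrapped back into [0, 360).
    bearing = (truth.bearing_deg + rng.gauss(0.0, 10.0 * spread)) % 360.0
    # Range error scales with the true range; clamp so it stays non-negative.
    range_nm = max(0.0, truth.range_nm * (1.0 + rng.gauss(0.0, 0.2 * spread)))
    # Less reliable sources fail to classify the contact more often.
    classification = (truth.classification
                      if rng.random() < reliability else "UNKNOWN")
    return ContactReport(source, bearing, range_nm, classification)


# The same contact, as seen by two sources of differing (assumed) quality.
truth = ContactReport("ground truth", 45.0, 120.0, "DDG")
rng = random.Random(7)  # seeded so a scenario can be replayed
reports = [
    degrade_report(truth, "radar", 0.9, rng),
    degrade_report(truth, "fishing vessel sighting", 0.4, rng),
]
```

Seeding the random generator keeps a scenario repeatable for after-action review while still giving the commander conflicting inputs to reconcile.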

The Artificial Cognitive Intelligence System

The ACI component of Fleet Emergence is a class of AI system that aims to replicate human-like cognitive abilities synthetically. Fleet Emergence can process information, learn, reason, problem-solve, and interact with the simulation environment. The ACI system involves a structured process where initial simulation objectives and scope are defined by scenario parameters that outline the cognitive abilities and tasks the system will need to perform. Relevant information about the specific scenario is preprocessed, and feature engineering is employed to create meaningful inputs to the system. Subsequently, the ACI system undergoes training, where it learns from patterns in the data. Validation and evaluation metrics are employed to assess performance, prompting fine-tuning and optimization. The Fleet Emergence ACI has multiple cognitive functions (planning, analysis, communication, deception), which are fully integrated.

The key concepts of the ACI are:

- Natural Language Processing (NLP)

This allows for text-based commands and visual decluttering through the use of a command-line interface (CLI). The ACI is enhanced in this area by the LLM layer.

- Machine Learning and Pattern Recognition

The ACI system can identify trends, make predictions, and classify information based on similarities. This allows for predicting enemy courses of action as well as identifying the nuances of the ‘cognitive processes’ of human opponents. This novel capability, combined with an ACI that can explain its decision making, is used to ‘profile’ human naval commanders, showing their strengths and weaknesses.

- Reasoning and Problem-Solving

The ACI system applies logic and reasoning to solve complex problems through a process known as chunking. Chunking, in simple terms, is the ability to break a single complex problem down into a sequence of simpler ones. This allows Fleet Emergence to formulate plans and make decisions based on a set of objectives and constraints. This is crucial for tasks like autonomous navigation, emission control, and weapon-target allocation. While not as advanced as human creativity, the ACI system has substantial ability to generate emergent tactics, plans, or actions within a set of predefined parameters.

- Long-Term Memory and Doctrinal Understanding

The ACI system can retain and recall information from previous engagements or actions, allowing it to build on or validate past knowledge and make informed decisions. This concept allows Fleet Emergence to perform detailed ‘After-Action’ analysis on single scenarios as well as expose trends in operational techniques used over many scenarios.

- Explainability and Interpretability

The ACI system is designed to provide explanations for its decisions and actions, allowing the human player to understand its reasoning processes. Feedback and debriefing after a simulation are integrated into the system, which can generate detailed reports and debriefings using natural language. It can analyze performance, highlight areas for improvement, and provide constructive feedback to players.
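The chunking idea described under Reasoning and Problem-Solving can be sketched as a recursive decomposition of one complex task into an ordered sequence of simpler steps. This is a toy illustration, not the ACI's actual planner: the `DECOMPOSITION` table, the task names, and the `chunk` function are hypothetical and chosen only to echo the examples in the text (emission control, weapon-target allocation).

```python
from typing import Dict, List

# Hypothetical decomposition rules: each complex task maps to an ordered
# list of simpler tasks. Tasks absent from the table are treated as primitive.
DECOMPOSITION: Dict[str, List[str]] = {
    "engage surface group": [
        "locate targets", "allocate weapons", "execute strike",
    ],
    "locate targets": [
        "set emission control", "launch scout aircraft", "fuse contact reports",
    ],
    "allocate weapons": [
        "rank targets by threat", "pair weapons to targets",
    ],
}


def chunk(task: str, rules: Dict[str, List[str]],
          _seen: frozenset = frozenset()) -> List[str]:
    """Expand `task` depth-first into an ordered list of primitive steps."""
    if task in _seen:
        # Guard against rules that decompose a task into itself.
        raise ValueError(f"cyclic decomposition at {task!r}")
    if task not in rules:
        return [task]  # primitive: nothing simpler to break it into
    steps: List[str] = []
    for subtask in rules[task]:
        steps.extend(chunk(subtask, rules, _seen | {task}))
    return steps


plan = chunk("engage surface group", DECOMPOSITION)
for number, step in enumerate(plan, start=1):
    print(f"{number}. {step}")
```

Because each primitive step stays linked to the higher-level task it came from, a structure like this also lends itself to the kind of explanation and after-action review described above.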

Integration of a Large Language Model with the ACI

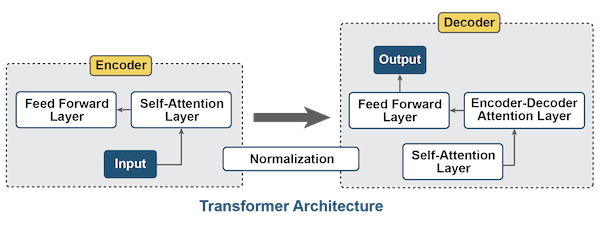

The Large Language Model layered into Fleet Emergence is a generative artificial intelligence system that has been trained on very large amounts of textual data to provide language assistance to the naval commander in the simulation. It uses a deep learning artificial neural network (ANN) architecture known as a transformer.

The process follows this general sequence:

First, each word in both the input and output sequences is represented as a numeric vector through an embedding layer. Word embedding represents words in such a way that semantically similar words sit closer together in a continuous vector space. This enables the LLM to capture context and meaning for the tasks the naval commander is communicating to the model. Because the attention mechanism itself has no built-in notion of word order, positional encodings are added to the input word embeddings so that the LLM can associate each input element (word) with its position. Next, the LLM computes attention scores for each word in the output sequence with respect to the words the naval commander used in the input sequence. These scores indicate how much focus the model should place on each input word when generating the corresponding output word. The attention scores are then used to compute a weighted sum of the encoder’s outputs, which represents the information from the input sequence that is most relevant for generating the current word in the output sequence. Finally, the LLM uses this weighted information along with its internal state to generate the next word in the output sequence.
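The sequence above can be sketched in a few lines of plain Python. This is a simplified, single-head illustration, assuming sinusoidal positional encodings and scaled dot-product attention as used in the standard transformer design. It omits the learned query, key, and value projections, and the toy embeddings, the three-word order, and the `decoder_state` vector are made-up values, not anything from Fleet Emergence.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def positional_encoding(position: int, dim: int) -> Vector:
    """Sinusoidal encoding of a token position (`dim` must be even)."""
    enc: Vector = []
    for i in range(0, dim, 2):
        angle = position / (10000 ** (i / dim))
        enc.extend([math.sin(angle), math.cos(angle)])
    return enc


def softmax(scores: Sequence[float]) -> Vector:
    """Turn raw scores into non-negative weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: Sequence[Vector],
           values: Sequence[Vector]) -> Tuple[Vector, Vector]:
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output component at a time.
    context = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
    return weights, context


# Toy 4-dimensional embeddings for a three-word order (values are made up).
embeddings = {
    "set": [0.2, 0.1, 0.0, 0.4],
    "emcon": [0.9, 0.3, 0.5, 0.1],
    "alpha": [0.4, 0.8, 0.2, 0.6],
}
order = ["set", "emcon", "alpha"]

# Step 1-2: embed each input word and add its positional encoding.
encoded = [
    [e + p for e, p in zip(embeddings[word], positional_encoding(pos, 4))]
    for pos, word in enumerate(order)
]

# Step 3-4: score the input words against a hypothetical decoder state and
# form the weighted sum that feeds generation of the next output word.
decoder_state = [0.5, 0.1, 0.3, 0.2]
weights, context = attend(decoder_state, encoded, encoded)
```

Here `weights` holds one attention weight per input word, and `context` is the blended representation the model would draw on when producing the next word.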

Large Language Models make use of a transformer; its key components and constructs are:

- Attention Mechanism

This allows the ANN to weigh the importance of different parts of the input when making predictions or decisions. When the naval commander creates operational or tactical orders, in text form, the attention mechanism can focus (pay the most attention to) certain parts that are the most relevant to the current task. This is what gives the transformer architecture the ability to capture long-range dependencies in the data.

- Feedforward Neural Network

In addition to the attention mechanism, transformers also include feedforward neural networks. These are standard neural networks that process the output from the attention layer. They add a non-linearity to the model’s encodings, allowing it to learn complex data patterns.

- Layer Normalization Mechanism

Additionally, transformers use layer normalization and residual connections to stabilize and accelerate training. Layer normalization rescales the activations of each layer to zero mean and unit variance, which improves the training process. Residual connections allow information to flow directly through the network, which helps avoid the vanishing gradient problem that arises when gradients are propagated back through many stacked layers.

- Self-Attention and Cross-Attention

Transformers use self-attention to relate different positions in the input sequence to one another and compute a representation for each position. This is crucial for understanding the context within the input. Cross-attention is used when the model needs to understand the relationship between two different sequences, such as the input and the output.
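As a rough illustration of how the feedforward network, layer normalization, and residual connection fit together, the sketch below wires them into one sublayer for a single token vector. It assumes a ReLU non-linearity and the "post-norm" arrangement LayerNorm(x + FFN(x)), and it leaves out the learned gain and bias that layer normalization normally carries. The function names and the tiny weight matrices are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def layer_norm(x: Sequence[float], eps: float = 1e-5) -> Vector:
    """Rescale activations to zero mean and approximately unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(x: Sequence[float], weights: Matrix,
           bias: Sequence[float]) -> Vector:
    """Compute weights @ x + bias, with one weight row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def feed_forward(x: Sequence[float], w1: Matrix, b1: Sequence[float],
                 w2: Matrix, b2: Sequence[float]) -> Vector:
    """Two linear layers with a ReLU non-linearity in between."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]
    return linear(hidden, w2, b2)


def sublayer(x: Sequence[float], w1: Matrix, b1: Sequence[float],
             w2: Matrix, b2: Sequence[float]) -> Vector:
    """Residual connection followed by layer normalization."""
    ffn_out = feed_forward(x, w1, b1, w2, b2)
    # The residual path adds the input back, giving gradients a direct route.
    return layer_norm([a + b for a, b in zip(x, ffn_out)])


# Tiny made-up weights: 2 inputs -> 3 hidden units -> 2 outputs.
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b1 = [0.0, 0.0, -0.5]
w2 = [[0.5, 0.0, 1.0], [0.0, 0.5, -1.0]]
b2 = [0.0, 0.0]

token = [1.0, -2.0]
output = sublayer(token, w1, b1, w2, b2)
```

In a full transformer the same pattern wraps the attention layer as well, and the output of one block becomes the input of the next.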

Training the Integrated Large Language Model

In Fleet Emergence the LLM is trained in two parts:

In the pre-training phase, the LLM is trained on publicly available Joint Operations and U.S. Naval doctrine. It learns to understand naval terminology, acronyms, and formats of naval reporting.

In the fine-tuning phase, the LLM is trained on tactics, techniques, and procedures of naval combat created by naval experts following specified use cases. The fine-tuning phase gives rise to transfer learning, where knowledge gained from solving one tactical or operational problem is applied to a different but related one. In the context of the LLM, this allows for emergent learning of concepts that were unknown to the model or, in some cases, to both the model and the naval expert.

Sentient Digital’s Contribution to Naval Wargame Simulation

SDi’s naval wargame simulation merges advanced Artificial Cognitive Intelligence (ACI) with a powerful Large Language Model (LLM) to revolutionize maritime strategic planning. This fusion elevates simulation realism and leverages the LLM’s capabilities for configurable, dynamic scenarios. The result is a cutting-edge training tool, offering high-quality realism, adaptability, and intelligence in naval wargaming.

Stay on Top of Developments in Artificial Intelligence, in Naval Wargaming and Beyond, with Sentient Digital

The landscape of modern naval wargaming is undergoing a profound transformation, thanks to the advent of cutting-edge, LLM-powered technologies such as Fleet Emergence. The power of AI-driven decision support systems, scenario generation, and adversary modeling is revolutionizing how the military trains its personnel, just as AI is transforming many aspects of modern life. Our commitment to staying at the forefront of this dynamic field enables us to deliver innovative solutions that empower our clients to gain and maintain a competitive edge.

To learn more about the ever-expanding possibilities of AI and to keep up with the latest developments, check out Sentient Digital’s blog. Our thought-provoking articles, which provide in-depth case studies and expert insights on subjects such as the intersection of AI and national defense and government and private sector applications of AI, will enrich your understanding of the field. At Sentient Digital, we custom tailor technology solutions to further our clients’ objectives, always prioritizing high quality. If you are interested in partnering with us, contact us to learn more.